In this tutorial, I will go through the different types available for creating Backups, which will be essential for your decisicion on which Backup Strategy to go for.

I will mainly focus on Full, Differential and Transaction Log Backups.

FULL DATABASE BACKUPS

This backup type will include all objects (views, procedures, functions...), the tables including the data, users and rights.

A Full Backup enables you to restore the database as it was at the time the Backup was created. Transactions occuring during the Backup process will also be captured.

DIFFERENTIAL BACKUPS

Differential Backups will capture the data that has been altered since the last Full Backup. So when restoring, you will need the Full + Differential Backup files.

An advantage is that Differential Backups are faster than Full Backups (could be a huge difference depending on the size of the Database).

TRANSACTION LOG BACKUPS

Transactions Log Backups will capture all changes that occured since the last transaction log backup. Within this process, a cleaning of the transaction logs happens (a removal of transactions that have been comitted or cancelled).

This Backup Type captures data up to the time the Backup Process was started, whereas Full and Differential Backups also capture transactions that are altered during the backup process.

FILE AND FILEGROUP BACKUPS

This type is usually used for large Databases. Basically specific Files and Filegroups are backed up.

COPY-ONLY BACKUPS

Copy-Only Backups will deliver the same results as a Full Database Backup or Transaction Log backups. The reason for using a Copy-Only Backup is that this Backup type will be ignored, meaning a Copy-Only Backup initiated between a Full and Transactional Backup will not affect the timestamp of the last Full Backup.

You can create a Backup either manually or with T-SQL Command.

If you decide to do it manually, right click on any Database -> Tasks -> Back Up

T-SQL:

--Full Backup

BACKUP DATABASE Databasename TO [YourPath]

--Transactional Backup

BACKUP LOG Databasename TO [YourPath]

--Differential Backup

BACKUP DATABASE Databasename TO [YourPath] WITH DIFFERENTIAL

Select the Database, the Backup Type and Destination

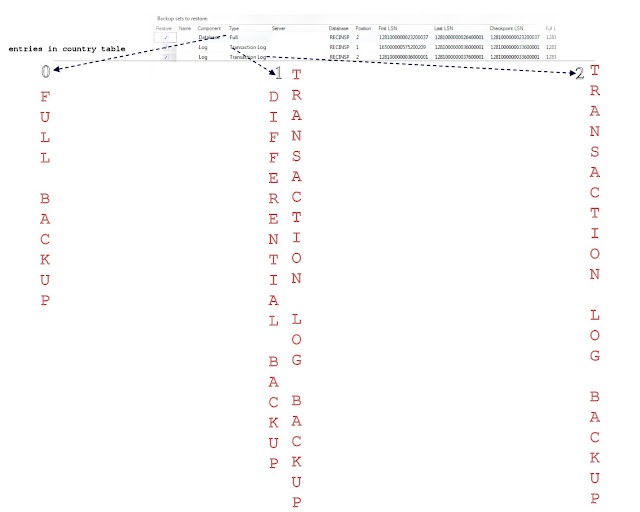

To get a better understanding of the different Backup Types, let's have a look at the table below.

At the time I created the Backup, it appears to be empty:

I inserted 1 country (Sweden) into the table and created a Differential and a Transaction Log Backup.

Finally I inserted another row by adding Netherlands to the table and created another Transaction Log Backup.

RESTORING BACKUPS

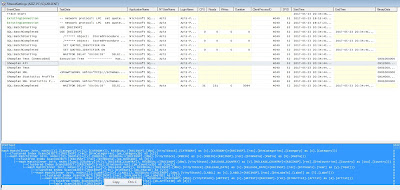

Now that we have created our Backups, will need to restore them. Right click on the Destination Database -> Tasks -> Restore -> Database

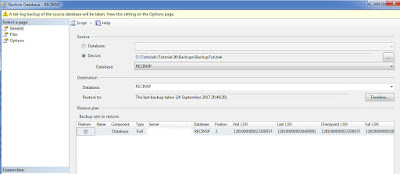

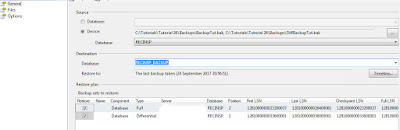

On the General tab, select device and click on the ... button to select location of the stored Backup Files.

You will also need to select a Destination Database.

On the Files tab, ensure your files are pointing to the right folder (and not of the original source)

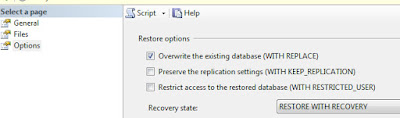

On the Options tab, select Overwrite and RESTORE WITH RECOVERY.

To restore a FULL Backup you need to select the Full Backup File:

To restore a TRANSACTION LOG Backup you need to select the Full Backup File + Transaction Log files:

As you can see in the above picture you have the option to restore to any time where an an Transaction Log Backup was created.

To get a better picture I will summarize the 3 Backup Types in relation to entries in the Country table.