You can find a nice documentation of this topic on the pandas website.

First we will need to import the following packages:

import pandas as pd

import numpy as np

import pyodbc as py

To get our data, we will establish a connection to a SQL server:

conn = py.connect(DRIVER = '{SQL Server}', host = server, Database = 'AW', IntegreatedSecurity = 'yes')

Next we will have to define the SQL query:

sql = 'SELECT so.SalesOrderID \

,st.Name \

,so.OrderDate \

,so.DueDate \

,so.ShipDate \

,so.SalesOrderNumber \

,so.PurchaseOrderNumber \

,so.AccountNumber \

,so.CurrencyRateID \

,so.TotalDue \

FROM Sales.SalesOrderHeader so \

INNER JOIN Sales.SalesTerritory st ON so.TerritoryID = st.TerritoryID'

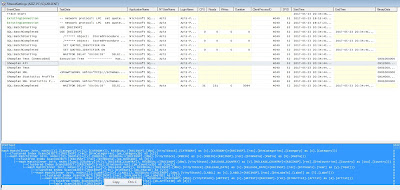

We will execute the query and store the result into a dataframe:

df = pd.read_sql(sql, conn)

print(df.head(5))

WHERE Filter

To filter on a certain column following syntax is used:

dataframe[dataframe['columnname'] == 'value']

print(df[df['SalesOrderNumber'] == 'SO70708'].head(5))

OR + AND Filter

Like in other programming languanges a pipe | is used for OR and a & symbol for AND

print(df[((df['SalesOrderNumber'] == 'SO70708') | (df['DueDate'] == '2008-05-28')) & (df['PurchaseOrder'] <=5 ])

NULL and NOT NULL

To find NULLs or NOT NULLs you simply can use the built in functions isnull() or notnull()

print(df[df['PurchaseOrderNumber'].isnull()])

print(df[df['PurchaseOrderNumber'].notnull()])

GROUP BY

Group By can be applied with the size() function

print(df.groupby('PurchaseOrderNumber').size())

It is possible to use mutliple aggregate funcions at once in connection with group by:

print(df.groupby('AccountNumber').agg({'TotalDue' : [np.mean, np.sum], 'AccountNumber' : np.size}))

JOINS

Now, let's move to the core element of SQL...Joins!

This can be achieved in Python with the merge function.

For better demonstration, I will create 2 simple data frames and show how to add an index and how to write a inner, left, right and full outer join in Python.

#Inner Join

df1 = pd.DataFrame({'key': ['A', 'B', 'C', 'D', 'E']})

df2 = pd.DataFrame({'key': ['B', 'C', 'D', 'D', 'F']})

print(pd.merge(df1, df2, on = 'key'))

#Left Outer Join

print(pd.merge(df1, df2, on = 'key', how = 'left'))

#Right Join

print(pd.merge(df1, df2, on = 'key', how = 'right'))

#Full Join

print(pd.merge(df1, df2, on = 'key', how = 'outer'))

UNION (ALL)

The function concat() will append the dataframes listed. This will deliver the same result as UNION ALL in SQL. If you want to get the same result as what an UNION does, add drop_duplicates() after the concat() function.

#Union all

print(pd.concat([df1, df2]))

#Union

print(pd.concat([df1, df2]).drop_duplicates())